The word pyrometer is derived from the Greek root pyro, meaning fire. The term pyrometer was originally used to denote a device capable of measuring the temperature of objects above incandescence, that is, objects that glow visibly to the human eye. The original pyrometers were non-contacting optical devices that intercepted and evaluated the visible radiation emitted by glowing objects.

A modern and more correct definition would be any non-contacting device that intercepts and measures the thermal radiation emitted from an object to determine its surface temperature. Thermometer, also from a Greek root, thermos, signifying hot, is used to describe a wide assortment of devices used to measure temperature. Thus a pyrometer is a type of thermometer. The designation radiation thermometer has evolved over the past decade as an alternative to optical pyrometer. Therefore the terms infrared pyrometer and radiation thermometer are used interchangeably by many references.

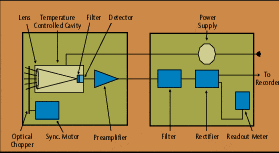

A radiation thermometer, in very simple terms, consists of an optical system and a detector. The optical system focuses the energy emitted by an object onto the detector, which is sensitive to the radiation. The output of the detector is proportional to the amount of energy radiated by the target object (less the amount absorbed by the optical system) and to the response of the detector at the specific radiation wavelengths. This output can be used to infer the object's temperature. The emissivity, or emittance, of the object is an important variable in converting the detector output into an accurate temperature signal.

Infrared optical pyrometers, which specifically measure the energy radiated from an object in the 0.7 to 20 micron wavelength range, are a subset of radiation thermometers. These devices measure this radiation from a distance, so, unlike thermocouples and resistance temperature detectors (RTDs), they need no direct contact with the object. Radiation pyrometers are especially suited to measuring moving objects or any surfaces that cannot be reached or touched.

But the benefits of radiation thermometry have a price. Even the simplest of devices is more expensive than a standard thermocouple or RTD assembly, and installation cost can exceed that of a standard thermowell. The devices are rugged, but they do require routine maintenance to keep the sighting path clear and the optical elements clean. Pyrometer systems used for more difficult applications may have more complicated optics, possibly rotating or moving parts, and microprocessor-based electronics. There are no industry-accepted calibration curves for radiation thermometers, as there are for thermocouples and RTDs. In addition, the user may need to investigate the application carefully to select the optimum technology, installation method, and signal compensation needed to achieve the desired performance.

What Are Emissivity, Emittance and the N Factor?

In an earlier chapter, emittance was identified as a critical parameter in accurately converting the output of the detector used in a radiation thermometer into a value representing object temperature.

The terms emittance and emissivity are often used interchangeably. There is, however, a technical distinction. Emissivity refers to the properties of a material; emittance refers to the properties of a particular object. In this latter sense, emissivity is only one component in determining emittance. Other factors, including the shape of the object, oxidation, and surface finish, must also be taken into account.

The apparent emittance of a material also depends on the temperature at which it is determined and the wavelength at which the measurement is taken. Surface condition affects the value of an object's emittance, with lower values for polished surfaces and higher values for rough or matte surfaces. In addition, as materials oxidize, emittance tends to increase and its dependence on surface condition decreases. Representative emissivity values for a range of common metals and non-metals at various temperatures are given in the tables starting on p. 72.

THE BASIC EQUATION USED TO DESCRIBE THE OUTPUT OF A RADIATION THERMOMETER IS:

$$V(T) = \varepsilon\, K\, T^{N}$$

Where:

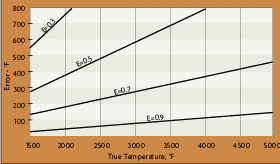

A radiation thermometer with the highest value of N (the shortest possible equivalent wavelength) should be selected to obtain the least dependence on changes in target emittance. The benefit of a high value of N extends to any parameter that affects the output V. A dirty optical system, or absorption of energy by gases in the sighting path, has less effect on the indicated temperature if N is high.
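To see why a high N helps, the sketch below takes the basic equation at face value and assumes N ≈ c₂/(λT), the local exponent of Wien's approximation (c₂ ≈ 14388 µm·K, λ the equivalent wavelength in µm, T in kelvin). If the instrument's emittance setting differs from the true emittance, solving ε_set K T_ind^N = ε_true K T^N gives T_ind = T (ε_true/ε_set)^(1/N), so the fractional temperature error is roughly 1/N times the fractional emittance error. The names `n_factor` and `indicated_temperature` are hypothetical, chosen for illustration.

```python
C2_UM_K = 14388.0  # second radiation constant c2, in micrometre-kelvin


def n_factor(wavelength_um: float, temp_k: float) -> float:
    """Approximate N factor: the local exponent c2 / (lambda * T) of Wien's law."""
    return C2_UM_K / (wavelength_um * temp_k)


def indicated_temperature(true_temp_k: float, emittance_true: float,
                          emittance_set: float, n: float) -> float:
    """Temperature reported by an instrument that obeys V = e * K * T**N.

    The instrument solves e_set * K * T_ind**N = e_true * K * T_true**N,
    so T_ind = T_true * (e_true / e_set) ** (1 / N); the constant K cancels.
    """
    return true_temp_k * (emittance_true / emittance_set) ** (1.0 / n)


if __name__ == "__main__":
    true_t = 1000.0  # kelvin
    for wavelength in (0.7, 10.0):  # micrometres: short vs long wavelength
        n = n_factor(wavelength, true_t)
        reading = indicated_temperature(true_t, 0.85, 0.90, n)
        print(f"lambda={wavelength} um  N={n:.1f}  indicated={reading:.1f} K")
```

With a true temperature of 1000 K, an actual emittance of 0.85 and a setting of 0.90, the 0.7 µm case reads about 997 K while the 10 µm case reads about 961 K.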

The values for the surface emissivities of almost all substances are known and published in reference literature.

However, emissivity determined under laboratory conditions seldom agrees with the actual emittance of an object under real operating conditions. For this reason, published emissivity data are best relied on when the values are high.

As a rule of thumb, most opaque non-metallic materials have a high and stable emissivity (0.85 to 0.90). Most unoxidized metallic materials have a low to medium emissivity (0.2 to 0.5). Gold, silver and aluminum are exceptions, with emissivity values in the 0.02 to 0.04 range. The temperatures of these metals are very difficult to measure with a radiation thermometer.

One way to determine surface emissivity experimentally is to compare the radiation thermometer measurement of a target with a simultaneous measurement obtained using a thermocouple or RTD. The difference in readings is due to the emissivity, which is, of course, less than one. For temperatures up to 500°F (260°C), emissivity values can be determined experimentally by putting a piece of black masking tape on the target surface. With a radiation pyrometer set for an emissivity of 0.95, measure the temperature of the tape surface (allowing time for it to reach thermal equilibrium). Then measure the temperature of the target surface without the tape. The difference in readings determines the actual value of the target emissivity.
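One way to turn the two readings into a number is to invert the basic equation V = εKT^N. Both readings are taken with the same emissivity setting, so ε_true T_ref^N = ε_set T_ind^N, which gives ε_true = ε_set (T_ind/T_ref)^N with absolute temperatures. This is a minimal sketch, assuming N ≈ c₂/(λT) at the reference temperature; the function name `estimate_emissivity`, the 10 µm wavelength and the readings in the usage are hypothetical.

```python
C2_UM_K = 14388.0  # second radiation constant c2, in micrometre-kelvin


def estimate_emissivity(reference_temp_c: float, indicated_temp_c: float,
                        emissivity_setting: float, wavelength_um: float) -> float:
    """Estimate target emissivity from a comparison reading.

    reference_temp_c: true surface temperature (thermocouple/RTD, or the
        reading on black tape taken with the 0.95 setting).
    indicated_temp_c: pyrometer reading of the bare surface, taken with the
        same emissivity_setting.
    Assumes V = e * K * T**N with N ~ c2 / (lambda * T), so
    e_true = e_set * (T_ind / T_ref) ** N, using absolute temperatures.
    """
    t_ref_k = reference_temp_c + 273.15
    t_ind_k = indicated_temp_c + 273.15
    n = C2_UM_K / (wavelength_um * t_ref_k)
    return emissivity_setting * (t_ind_k / t_ref_k) ** n


if __name__ == "__main__":
    # Hypothetical tape test: tape reads 200 C, bare surface reads 180 C,
    # both with the instrument set to 0.95, on a 10 um instrument.
    print(round(estimate_emissivity(200.0, 180.0, 0.95, 10.0), 3))
```

For these hypothetical readings the estimate comes out at roughly 0.83. Because N depends on wavelength, the same 20 °C difference implies a lower emissivity for a shorter-wavelength instrument.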

Many instruments now have calibrated emissivity adjustments. The adjustment may be set to a value of emissivity determined from tables or experimentally, as described in the preceding paragraph. For highest accuracy, independent determination of emissivity in a lab at the wavelength at which the thermometer measures, and possibly at the expected temperature of the target, may be necessary.

Emissivity values in tables have been determined with a pyrometer sighted perpendicular to the target. If the actual sighting angle is more than 30 to 40 degrees from the normal to the target, lab measurement of emissivity may be required.

In addition, if the radiation pyrometer sights through a window, an emissivity correction must be made for energy lost by reflection from the two surfaces of the window, as well as for absorption in the window. For example, about 4% of the radiation is reflected from each glass surface in the infrared ranges, so the effective transmittance is about 0.92. The loss through other materials can be determined from the index of refraction of the material at the wavelength of measurement.
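The link between index of refraction and reflection loss is the Fresnel equation: at normal incidence a single surface in air reflects R = ((n − 1)/(n + 1))². The sketch below assumes a non-absorbing window, normal incidence, and no multiple internal reflections; the function names are hypothetical. For n = 1.5, R = 0.04 per surface and (1 − R)² ≈ 0.92, which reproduces the figure above. Folding the transmittance into the emissivity setting is one simple way to apply the correction.

```python
def surface_reflectance(n: float) -> float:
    """Fresnel reflectance of one window surface at normal incidence, in air."""
    return ((n - 1.0) / (n + 1.0)) ** 2


def window_transmittance(n: float) -> float:
    """Fraction transmitted through both surfaces of a non-absorbing window.

    Ignores absorption inside the window and multiple internal reflections.
    """
    r = surface_reflectance(n)
    return (1.0 - r) ** 2


def emissivity_setting_through_window(target_emissivity: float, n: float) -> float:
    """Emissivity to dial in so that the window loss is folded into the correction."""
    return target_emissivity * window_transmittance(n)


if __name__ == "__main__":
    for index in (1.5, 2.4):  # ordinary glass vs. a hypothetical higher-index window
        print(index,
              round(surface_reflectance(index), 3),
              round(window_transmittance(index), 3),
              round(emissivity_setting_through_window(0.90, index), 3))
```

Any absorption in the window would lower the transmittance further, so a real correction may need a measured transmittance at the instrument's wavelength.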

The uncertainties concerning emittance can be reduced by using short-wavelength or ratio radiation thermometers. Short wavelengths, around 0.7 microns, are useful because the signal gain is high in this region. The higher response at short wavelengths tends to swamp the effects of emittance variations. The high gain of the radiated energy also tends to swamp the absorption effects of steam, dust or water vapor in the sight path to the target. For example, setting the wavelength at such a band will cause the sensor to read within ±5 to ±10 degrees of the true temperature when the material has an emissivity of 0.9 (±0.05). This represents about 1% to 2% accuracy.
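The wavelength effect can be checked with Planck's law directly, without the power-law approximation. For an idealized, perfectly monochromatic instrument, the reading satisfies ε_set/(exp(x_ind) − 1) = ε_true/(exp(x) − 1), with x = c₂/(λT). The sketch below solves this for the indicated temperature and reports the error band for ε = 0.90 ± 0.05. It is an assumption-laden illustration: real instruments integrate over a band, ratio thermometers are not modeled, and the function names are hypothetical. Under Wien's approximation the error grows roughly as λT²/c₂ times the fractional emittance error, so the band widens quickly with temperature.

```python
import math

C2_UM_K = 14388.0  # second radiation constant c2, in micrometre-kelvin


def indicated_temperature_planck(true_temp_k: float, wavelength_um: float,
                                 emittance_true: float, emittance_set: float) -> float:
    """Reading of an ideal single-wavelength thermometer (Planck's law).

    The instrument solves e_set / (exp(x_ind) - 1) = e_true / (exp(x) - 1),
    where x = c2 / (lambda * T); expm1/log1p keep small-x cases accurate.
    """
    x = C2_UM_K / (wavelength_um * true_temp_k)
    x_ind = math.log1p((emittance_set / emittance_true) * math.expm1(x))
    return C2_UM_K / (wavelength_um * x_ind)


def error_band(true_temp_k: float, wavelength_um: float,
               emittance_nominal: float = 0.90, tolerance: float = 0.05):
    """Reading errors (K) when the real emittance is nominal -/+ tolerance
    but the instrument stays set to the nominal value."""
    low = indicated_temperature_planck(true_temp_k, wavelength_um,
                                       emittance_nominal - tolerance, emittance_nominal)
    high = indicated_temperature_planck(true_temp_k, wavelength_um,
                                        emittance_nominal + tolerance, emittance_nominal)
    return low - true_temp_k, high - true_temp_k


if __name__ == "__main__":
    for wavelength in (0.7, 10.0):  # micrometres
        low, high = error_band(1000.0, wavelength)
        print(f"{wavelength} um: {low:+.1f} K / {high:+.1f} K")
```

At 1000 K this idealized model gives errors of roughly ±3 K at 0.7 µm but roughly ±30 K at 10 µm.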