The sensations of hot and cold are fundamental to the human experience, yet finding ways to measure temperature has challenged many great minds. It’s unclear if the ancient Greeks or Chinese had ways to measure temperature, so as far as we know, the history of temperature sensors began during the Renaissance.

The Measurement Challenge

Robert Hooke

Ole Roemer

Temperature reflects the thermal energy in a body or material: the more energy, the hotter it is. But unlike physical properties such as mass and length, it has been difficult to measure directly. Most methods are indirect: they observe the effect that heat has on something and deduce the temperature from that.

Creating a scale of measurement has been a challenge, too. In 1664, Robert Hooke proposed the freezing point of water be used as a zero point, with temperatures being measured from this. Around the same time, Ole Roemer saw the need for two fixed points, allowing interpolation between them. The points he chose were Hooke’s freezing point and also the boiling point of water. This, of course, leaves open the question of how hot or cold things can get.

That was answered by Gay-Lussac and other scientists working on the gas laws. During the 19th century, while investigating the effect of temperature on a gas at constant pressure, they observed that volume rises by a fraction of 1/267 per degree Celsius (later revised to 1/273.15). Extrapolating in the other direction, the volume would shrink to zero at minus 273.15°C, which led to the concept of absolute zero.
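The extrapolation behind that figure can be sketched in a few lines of Python. This is only an illustration of the idealized linear gas law described above; the function names are hypothetical and not taken from any historical source.

```python
def gas_volume(v0, t_celsius, expansion_per_degree=1 / 273.15):
    """Idealized volume at constant pressure: V(t) = V0 * (1 + k * t)."""
    return v0 * (1.0 + expansion_per_degree * t_celsius)


def absolute_zero_celsius(expansion_per_degree):
    """Temperature (in °C) at which the idealized volume reaches zero."""
    # Setting V0 * (1 + k * t) = 0 gives t = -1 / k.
    return -1.0 / expansion_per_degree


# The early estimate of 1/267 implies an absolute zero near -267 °C;
# the revised 1/273.15 gives the modern value.
early_estimate = absolute_zero_celsius(1 / 267)
revised_estimate = absolute_zero_celsius(1 / 273.15)
```

Real gases liquefy long before reaching this point, so the zero-volume temperature is a mathematical limit rather than something observed directly.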

Observing Expansion: Liquids and Bimetals

Galileo is reported to have built a device that showed changes in temperature sometime around 1592. This appears to have used the contraction of air in a vessel to draw up a column of water, the height of the column indicating the extent of cooling. However, this was strongly influenced by air pressure and was little more than a novelty.

The thermometer as we know it was invented in 1612 in what is now Italy by Santorio Santorii. He sealed liquid inside a glass tube, observing how it moved up the tube as it expanded. A scale on the tube made it easier to see changes, but the system lacked precise units.

Working with Roemer was Daniel Gabriel Fahrenheit. He began manufacturing thermometers, using both alcohol and mercury as the liquid. Mercury is ideal, as it has a very linear response to temperature change over a large range, but concerns over toxicity have led to reduced use. Other liquids have now been developed to replace it. Liquid thermometers are still widely used, although it is important to control the depth at which the bulb is immersed. Using a thermowell helps ensure good heat transfer.

The bimetallic temperature sensor was invented late in the 19th century. This takes advantage of the differential expansion of two metal strips bonded together. Temperature changes create bending that can be used to activate a thermostat or a gauge similar to those used in gas grills. Accuracy is low — perhaps plus or minus 2 degrees — but these sensors are inexpensive, so they have many applications.

Galileo Galilei

Santorio Santorii

Burial Plaque of Daniel Gabriel Fahrenheit

Thermoelectric Effects

Early in the 19th century, electricity was an exciting area of scientific investigation, and scientists soon discovered that metals varied in their resistance and conductivity. In 1821, Thomas Johann Seebeck discovered that a voltage is created when the ends of dissimilar metals are joined and placed at different temperatures. Peltier discovered that this thermocouple effect is reversible and can be used for cooling.

In the same year, Humphry Davy demonstrated how the electrical resistivity of a metal is related to temperature. Five years later, Becquerel proposed using a platinum-palladium thermocouple for temperature measurement, but it took until 1829 for Leopoldo Nobili to actually create the device.
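As a rough illustration of how a thermocouple reading becomes a temperature, the sketch below treats the Seebeck voltage as proportional to the temperature difference between the two junctions. The default sensitivity of 41 microvolts per degree Celsius is an assumed, approximate figure for a modern type K thermocouple, not a value from the history above, and real instruments use standardized nonlinear reference tables rather than a single constant.

```python
def thermocouple_voltage_uv(t_hot_c, t_cold_c, seebeck_uv_per_c=41.0):
    """Approximate output in microvolts for a given junction temperature difference."""
    return seebeck_uv_per_c * (t_hot_c - t_cold_c)


def hot_junction_temperature_c(voltage_uv, t_cold_c, seebeck_uv_per_c=41.0):
    """Recover the hot-junction temperature from the measured voltage.

    The cold (reference) junction temperature must be known separately,
    because the voltage only encodes the difference between the junctions.
    """
    return t_cold_c + voltage_uv / seebeck_uv_per_c
```

For example, under these assumptions a reading of 4100 microvolts with the reference junction at 25°C corresponds to a hot junction at about 125°C.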

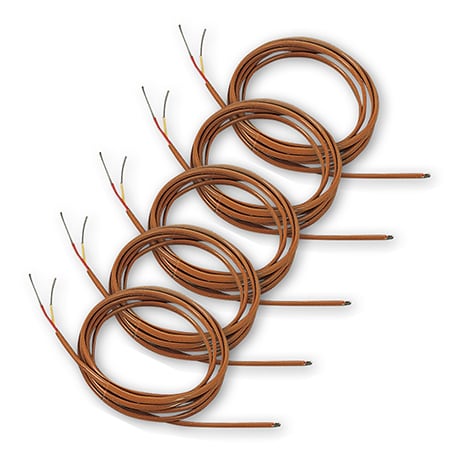

Platinum is also used in the resistance temperature detector invented in 1932 by C.H. Meyers. This measures the electrical resistance of a length of platinum wire and is generally considered the most accurate type of temperature sensor. RTDs using wire are by nature fragile and unsuitable for industrial applications. Recent years have seen the development of film RTDs, which are less accurate but more robust.
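To show how a resistance reading is turned into a temperature, here is a minimal sketch for a Pt100 element, a common platinum RTD with a resistance of 100 ohms at 0°C. The sensor type and the coefficients are assumptions for illustration (they follow the widely used IEC 60751 values for temperatures at or above 0°C) and are not taken from Meyers's original design.

```python
import math

# Assumed Pt100 constants (IEC 60751 style), valid for 0 °C and above.
R0 = 100.0      # resistance in ohms at 0 °C
A = 3.9083e-3   # linear coefficient, 1/°C
B = -5.775e-7   # quadratic coefficient, 1/°C^2


def pt100_resistance(t_c):
    """Resistance in ohms at temperature t_c (°C), for t_c >= 0."""
    return R0 * (1.0 + A * t_c + B * t_c ** 2)


def pt100_temperature(r_ohm):
    """Invert the quadratic to get temperature in °C, for r_ohm >= R0."""
    # Solve B*t^2 + A*t + (1 - r/R0) = 0 and take the root that is 0 at r = R0.
    return (-A + math.sqrt(A * A - 4.0 * B * (1.0 - r_ohm / R0))) / (2.0 * B)
```

With these constants the element reads about 138.5 ohms at 100°C, so the change per degree is small, which is one reason RTD circuits need careful resistance measurement.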

The 20th century also saw the invention of semiconductor temperature measurement devices. These respond to temperature changes with good accuracy but until recently lacked linearity.

Thermal Radiation

Samuel Langley

William Herschel

Very hot and molten metals glow, giving off heat and visible light. They radiate heat at lower temperatures, too, but at longer wavelengths. English astronomer William Herschel was the first to recognize, around 1800, that this “dark” or infrared light causes heating. Working with his compatriot Melloni, Nobili found a way to detect this radiated energy by connecting thermocouples in series to make a thermopile.

This was followed in 1878 by the bolometer. Invented by American Samuel Langley, it used two platinum strips, one of which was blackened, in a Wheatstone bridge arrangement. Heating by infrared radiation caused a measurable change in resistance.

Bolometers are sensitive to infrared light across a wide range of wavelengths. In contrast, the photon detector-type devices developed since the 1940s tend to respond only to infrared in a limited wave band. Lead sulfide detectors are sensitive to wavelengths up to 3 microns, while the discovery of the HgCdTe ternary alloy in 1959 opened the door to detectors tailored to specific wavelengths.

Today, inexpensive infrared pyrometers are used widely, and thermal cameras are finding more applications as their prices drop.

Temperature Scales

Lord Kelvin

Anders Celsius

When Fahrenheit was making thermometers, he realized he needed a temperature scale. He set the freezing point of salt water at 30 degrees and its boiling point 180 degrees higher. Subsequently, it was decided to use pure water, which freezes at a slightly higher temperature, giving us freezing at 32°F and boiling at 212°F.

A quarter century later, Anders Celsius proposed the 0 to 100 scale, which today bears his name. Later, seeing the benefit in a fixed point at one end of the scale, William Thomson, later Lord Kelvin, proposed using absolute zero as the starting point of the Celsius system. That led to the Kelvin scale, used today in the scientific field.
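The relationships among the three scales follow directly from the fixed points mentioned above: water freezes at 0°C or 32°F and boils at 100°C or 212°F, and absolute zero lies at minus 273.15°C. A minimal conversion sketch, with hypothetical function names, might look like this:

```python
def celsius_to_fahrenheit(c):
    """100 Celsius degrees span 180 Fahrenheit degrees, offset by 32."""
    return c * 9.0 / 5.0 + 32.0


def fahrenheit_to_celsius(f):
    return (f - 32.0) * 5.0 / 9.0


def celsius_to_kelvin(c):
    """Kelvin uses the Celsius degree size but starts at absolute zero."""
    return c + 273.15


def kelvin_to_celsius(k):
    return k - 273.15
```

For instance, celsius_to_fahrenheit(100.0) gives 212.0 and kelvin_to_celsius(0.0) gives -273.15.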

Today, temperature measurement scales are defined in a document titled the International Temperature Scale of 1990, or ITS-90 for short. Readers who would like to check or better understand their measurement units should obtain a copy.